1

Software documentation can be defined as,

an artefact whose purpose is to communicate information about the software system to which it belongs.

Its role in a software project is not well understood. Many software professionals profess to the importance of documentation in a software environment without a strong grasp of what constitutes quality documentation. In fact, documentation in practice is frequently incomplete and rarely maintained and hence is often believed to be of low quality. Yet, software continues to be designed, constructed and delivered. The documentation problem is usually described as either a lack thereof leading to incomprehensible code; or as something unhealthy that hinders the understanding of the system on a whole because the right documents and appropriate information are difficult (if not impossible) to locate and properly utilize.

The seemingly common-sense approach to improving software documentation – that is, properly documenting all aspects of a software system while maintaining its integrity as changes occur – may not apply, at least in its entirely, to software engineering. At face value, up-to-date, accurate and complete documentation is preferred over outdated, inconsistent and incomplete documentation. However, in the realm of a real software environment, where other factors beyond documentation must be taken into consideration, maintaining documentation may not be the most appropriate or beneficial approach to software development.

Our research will investigate the role of software documentation. Based on our findings, we will introduce a document maintenance model to help cope with the complications arising from the use and maintenance of software documentation.

Since most of a Software Engineer’s time will be spent doing maintenance [52], it seems appropriate that software documentation should be an important aspect of the software process.

But, what constitutes good documentation? Most individuals believe two main requirements for good documentation are that it is complete and up-to-date. We hypothesise that other factors may have a greater impact on documentation relevance than has been previously thought.

We must also consider the issue of the applicability and usefulness of a document. Can complete and up-to-date documents that are rarely referenced or used be considered of high quality? What consideration should be given to incomplete, highly referenced documents that appropriately communicate to their intended audience?

To gauge the quality of documentation, factors beyond its completeness and being up to date should at least be considered when discussing documentation relevance.

Our work is driven to uncover factors, preferably measurable ones, that contribute to (or hinder) documentation relevance. We hope to exploit the effects of these factors to better predict the effectiveness of a document based on the current environment where that document exists.

During our interactions with software professionals and managers, we observed that some large-scale software projects had an abundance of documentation. Regrettably, little was understood about the organization, maintenance and relevance of these documents.

A second observation was that several small to medium-scale software projects had little to no software documentation. Individuals in these software teams said they believed in the importance of documentation, but timing and budget constraints left few resources to fully (and sometimes even partially) document their work.

The primary questions arising from the above interactions include:

· How is documentation used in the context of a software project? By whom?

· How does that set of documents favourably contribute to the software project (such as improving program comprehension)?

· How can technology improve the use, usefulness and proper maintenance of such documentation?

Team members of one of the large-scale projects sought practical solutions to organizing and maintaining documentation knowledge. Meanwhile, the individuals in the smaller projects were looking for the benefits of documentation from both a value-added and a maintenance perspective.

Based on the observations above, our research objectives are summarized as follows:

· Uncovering current practices and perceptions of software documentation based on the knowledge and expertise of software professionals.

· Exploiting the information gathered from software professionals to help create suitable models to better define and gauge documentation relevance.

· Implementing an appropriate software tool to put into practice the models uncovered above to better visualize documentation from a relevance perspective.

· Validating our documentation models by experimenting with our approach under several software project parameters.

Our research presents important findings for various reasons and to several audiences:

· Individuals interested in documentation technologies can use the research to better understand which existing technologies may be more appropriate than others, and why.

· Software decision makers can use the data to justify the use and selection of certain documentation technologies to best serve the information needs of the team.

· Using the results of our work, better documentation tools and technologies might emerge. Software designers will have a better grasp of the role of documentation in a software environment and under what circumstances it is, or can be, used.

· Our proposed models of documentation relevance provide a framework from which more feature-rich applications can be explored to better support the array of functionality offered by our models.

To a smaller extent, our work also contributes a body of knowledge relating to other facets of documentation including the current use and perception of software documentation tools and technologies. This information could be useful to individuals involved in documentation engineering, or that are involved in the documentation process.

The thesis begins with a background of the related work and literature that was referenced throughout our research. Following this, we introduce our empirical study of software documentation, followed by an analysis of the results. This analysis forms the basis and justification of our work. We analyzed the data from a document relevance perspective, looking at how documentation is presently used as well as how its attributes and external factors affect its usefulness. We then analyzed the results from a document engineering perspective, looking at how technology is both currently used and potentially could be used in the software documentation domain.

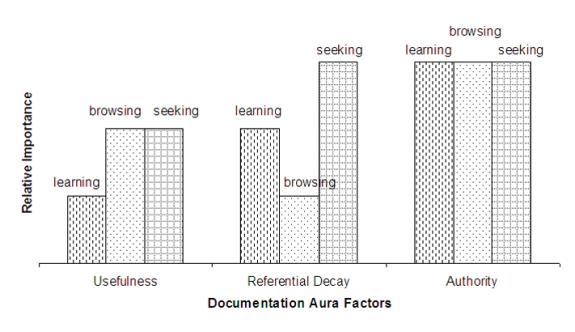

Following our analysis of current software documentation practices, we will introduce the concept of document Aura as

a prediction of an artefact’s relevance in a particular situation based on an artefact’s usefulness, referential decay and authority (hence, Aura). A measure of Aura is a prediction based on the environment in which an artefact exists as well the conditions under which the prediction is requested.

Drawing from other computer science domains that concern profiling, ranking and relating information, as well as using basic heuristics about information and documentation, we will describe a model to track and gauge documentation maintenance. We will describe the software architecture and implementation of our model. After which, we will evaluate the effectiveness of our ideas by studying how developers interact with our model (implemented as a software documentation tool) during software maintenance.

For more information about the vocabulary used throughout this document, the following two options are available. First, a glossary is available at the end of this document. Second, most glossary terms are defined within the document, on its first use; such as document Aura above.

In detail, the chapters have been organized as followed.

Chapter 2 reviews the available literature about software documentation and introduces the background essential for the remainder of our work.

Chapter 3 discusses the survey we performed in April 2002. The chapter also provides preliminary results and analysis based on the survey data.

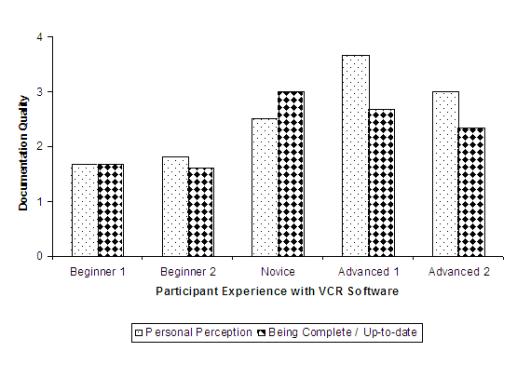

Chapter 4 elaborates on the findings presenting in Chapter 3. In particular, this chapter focuses on the current roles and perception of documentation. This chapter presents a hypothesis suggesting low correlation between documentation quality and documentation maintenance.

Chapter 5 analyses the survey results from a documentation engineering perspective. The chapter highlights the current use of documentation technologies and the participants’ preferences (and aversions) towards those technologies. This chapter introduces the requirements of a software solution that addresses several documentation issues described in previous chapters.

Chapter 6 investigates an approach to modeling documentation relevance; known as the Aura of a document. The metrics we have applied to predict relevance are based on the analysis of related literature, including heuristics of knowledge ranking and coordination, and our findings from the survey we conducted on documentation.

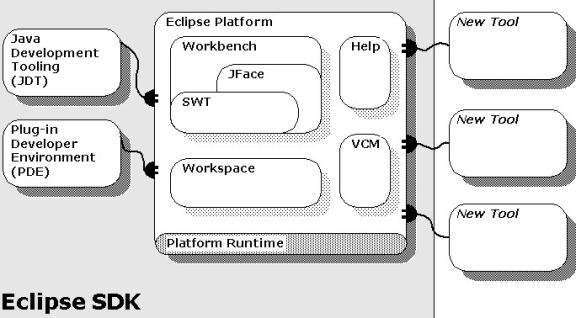

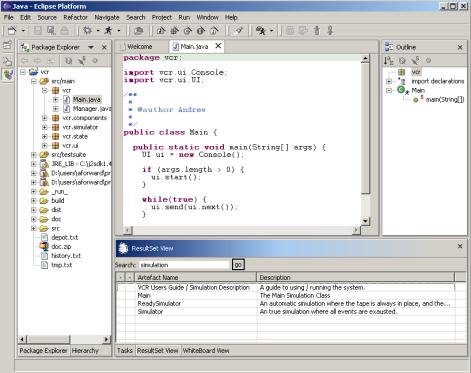

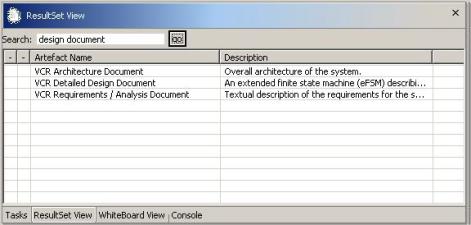

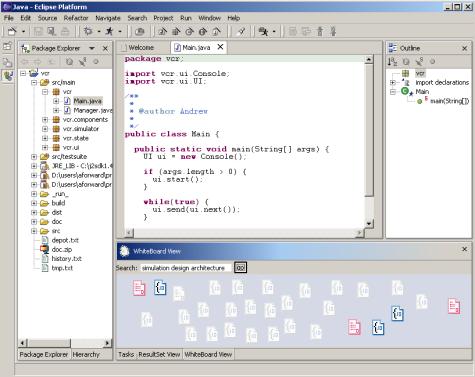

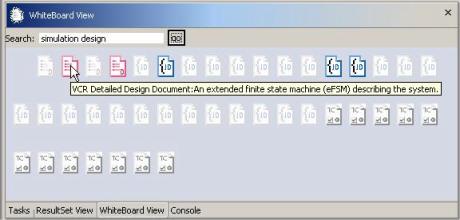

Chapter 7 describes the software implementation of a system that tracks document Aura. This tool aims to manage project artefacts to better predict their future need (as both a source of information during searching and browsing and tasks related to document maintenance). The software is the culmination of the research presented in previous chapters.

Chapter 8 provides empirical evidence suggesting the value of tracking document Aura. Our experiment involved novice and expert users of a small VCR software simulator system.

Chapter 9 presents conclusions, future research, and summarizes to what extent we achieved our objectives.

2

The literature we consulted throughout our research has been focused on the following areas of study:

· Software Documentation. Obviously we are concerned with past research that helps define the role of documentation in a software environment as well as provides an analysis of the contribution of documentation to a software project.

· Software Engineering Principles and Heuristics. Looking beyond documentation engineering, we were interested in integrating proven software engineering practices into the realm of documentation.

· Information metrics. This section analyzes how information is processed, ranked and prioritized. Much of the work in this section has typically been applied to fields outside that software documentation, but may in-fact be quite useful.

2.1 Defining Software Documentation

This section investigates the perceived purpose of documentation in a software environment. First we investigate the idea that documentation is more than textural descriptions of a software system, but more importantly, a form of communication among team members. Next, we compare the idea of models versus documentation. After which, we analyse the role of documentation in a software project and finally we discuss the perceived quality of documentation based on its being up-to-date.

Documentation standards proposed by the IEEE (including 829, 1074) and ISO (such as 12207, 6592, etc) help define the contents of software documentation under certain situations, Our approach seeks to define documentation at a much more abstract level. Although it is important to understand and define what the contents of software documentation should be (as done by the standards listed above), we must first understand the goals of software documentation as a whole.

2.1.1 Documentation is communication

Scott Ambler [1] describes the critical components of software documentation from an agile perspective. We believe Ambler’s descriptions of documentation are suitable for most software projects, agile or not.

The key elements in Ambler’s list of recommended documentation traits describe a fundamental issue of documentation being communication, not documentation [1].

Andrew Hunt and David Thomas [26] explain that communication is achieved only when information is being conveyed. Based on the assumption that documentation is communication, the goal of a particular software document is to convey information. This information may not necessarily be completely accurate or consistent.

Conversely, Brian Baurer and David Parnas [7] argue that precise and mathematical documentation improves software maintenance. They believe that it is to everyone’s advantage to invest in preparing and maintaining such documentation. Although Baurer and Parnas’ results did agree with their original hypothesis that mathematical documentation improves maintenance, we do not believe the efforts to produce and maintain precise mathematical models of a software system are feasible for software professionals in the industry as a whole. We do not disagree with the premise of the results, which is, that mathematical documentation improves maintenance. Instead we are concerned with the applicability of their approach to documentation. The paper reports on a project with only 500 lines of code and the techniques were applied in an educational environment. Several real-world constraints were not applicable to their case study and, as such, the results are probably ill-suited for documentation in practice.

Ned Chapin [14] states that the value of software can be determined by many factors. One of the four main issues related to software value is its documentation. Chapin describes several main concerns regarding documentation: quality, obsolescence or missing content. To preserve and enhance software value, Chapin recommends to “keep the documentation current and trustworthy” [14]. It is important to note that merely being current and trustworthy do not necessarily imply applicable or useful. In a separate paper [13], Chapin states that as content becomes obsolete, confidence decreases and the cost of software maintenance increases.

Ryszard Janicki, David Parnas and Jeffery Zucker [27] describe the use of relations (represented as tables) as a means of documenting the requirements and behaviour of software. Their work highlights two key limitations to current documentation practices. Janicki et al state that documentation continues to be inadequate due to the vagueness and imprecision of natural languages. These individuals believe that even the best software documentation is unclear. Janicki et al suggest that the limitation in natural languages is a primary cause of informal documentation. They further add that this informality of the documentation in turn cannot be systematically analysed, resulting in inconsistent and incomplete information. Janicki et al then present a solution to building consistent and complete documentation by describing software using tabular mathematical relationships. Although we agree with the motivation for their work, their claim that documentation must be complete and consistent can be somewhat misleading as it ignores what we believe to be the fundamental issues of documentation; communication. Instead, we accept that by understanding the limitations of our ability to communicate effectively, we are better able to deliver artefacts that serve a communication need.

Alistair Cockburn, author of Agile Software Development [15], further describes the limitations of our ability to communicate by stating,

“We don’t notice what is in front of us, and we don’t have adequate names for what we do notice. But it gets worse: When we go to communicate we don’t even know exactly what it is we mean to communicate. The only thing that might be worse is if we couldn’t actually communicate our message.” [15] (page 7-8)

Cockburn continues by saying that managing the incompleteness of communications is the key to software development. In regard to software documentation, Cockburn believes that documentation should be sufficient to the purposes of the intended audience.

Cockburn compares software development to a game whose two primary goals are to “deliver the software and to create an advantageous position for the next game, which is either altering or replacing the system or creating a neighboring system” [15] (page 36). Cockburn also describes intermediate work products as any artefact products that are not directly related to the primary goals of the software game. Cockburn then describes the importance of intermediate work products as follows.

“Intermediate work products are not important as models of reality, nor do they have intrinsic value. They have value only as they help the team make a move in the game. Thus, there is no idea to measure intermediate work products for completeness or perfection. An intermediate work product is to be measured for sufficiency: Is it sufficient to remind or inspire the involved group?” [15] (page 34)”

Alistair Cockburn makes an important comment about intermediate work. First, it is our assertion that most software artefacts apart from the system itself represent intermediate work, including software documentation. Although we believe these artefacts are important to the success of the software game, they are not directly related to the prime goals of software; that is, delivering software. Hence, using metrics based on completeness and being up-to-date are inadequate at reliably ranking documentation effectiveness. Instead, metrics that reflect sufficiency would provide a more appropriate technique to document ranking.

Scott Ambler delivers a similar message in his writings about agile documentation [1]. We believe the strength of documentation lies in its capacity to deliver knowledge and fulfil a communication need and not necessarily to reflect the most current, complete or accurate reflection of the system it describes. As a consequence, metrics that provide insight about, or attempt to predict, a document’s ability to illicit knowledge in its readers would contribute to improved documentation ranking techniques.

2.1.2 Software Models Versus Documentation

Scott Ambler describes a software document as “any artifact external to source code whose purpose is to convey information in a persistent manner” [1], and a software model as “an abstraction that describes one or more aspects of a problem or a potential solution addressing a problem” [1].

Ambler describes the relationship between models, documents and documentation below in Figure 2‑1.

Figure 2‑1: The relationship between models, documents, source code, and documentation. [1]

Ambler describes models as temporary artefacts that may or may not be transformed into a permanent document and form part of the software documentation.

We agree that some artefacts, such as software models, may have a temporary lifespan relative to other artefacts. However, our perception is that models and documents are both merely subsets of the notion of documentation as a whole. Documents need not be permanent artefacts in a software system, nor must all models be disposable. Documentation is an abstraction of knowledge about a software system and only as long as a document or model (or any other artefact) can effectively communicate knowledge does it forms part of the project’s software documentation, even if only for a short lifespan. In fact, we also concede that the source code itself is one type of artefact as it, too, conveys knowledge about the software system.

2.1.3 The Role of Documentation

Bill Thomas, Dennis Smith and Scott Tilley [51] raise several fundamental questions in their discussion about software documentation. Our work will attempt to shed light on the following questions from Thomas et al.:

· What types of documentation does a software engineer (or support staff member) need?

· Who should produce, maintain and verify documentation to assure an appropriate level of quality?

· Why should documentation be produced at all?

Benson H. Scheff and Tom Georgon [45] have one perspective on some of the questions above. They believe that software engineers “do the software engineering part” [45], while documentation ‘systems’, which consist of support staff and automated tools, do the rest.

According to Scheff and Georgon’s perspective, the role of the software engineer with regard to documentation includes:

· providing templates

· identifying the document content and format

We believe there are two inherent problems when excluding software engineers from the documentation as described above. First, although documentation templates do provide an efficient framework for re-use, the communication (and hence information) needs differ among software projects. Therefore templates will not always provide the most efficient solution based the unique needs of the software project. Second, as most concede and Abdulaziz Jazzar and Walt Scacchi affirmed in [28], much of the knowledge about a software system is in the minds of the software engineers. Based on the complications arising from communication as described by Alistair Cockburn [15], the intermediary transfer of knowledge will most likely result in lower quality documentation.

Our opinion that software engineers must be more involved in software documentation than Scheff and Georgon recommend is described below. Our opinion is based on the idea that the translation between sources, such as from an engineer to a technical writer, introduces noise into the translation and may distort the message. In particular, Scheff and Georgon’s approach would involve a translation from the primary source (the software engineers) to a secondary source (the documentation systems described by Scheff and Georgon). As well, their approach would also require a translation from the documentation system to the actual documentation. As such, it is probable that Scheff and Georgon’s approach would introduce a greater amount of noise into the system than a more direct approach to creating documentation.

Bill Curtis, Herb Krasner, and Neil Iscoe [17] believe that documentation should focus on how requirements and design decisions were made, represented, communicated and changed over the lifespan of a software system. As well, documentation should describe the impact of the current system on future development processes. Their study involved interviewing personnel from seventeen large software projects. Their analysis focused on the problems of designing large software systems; but many results report directly about the use (and misuse) of documentation in a software project.

Curtis et al found that behavioural factors (such as team members or other individuals of the same organization) had a substantial impact on the success of a project and were far more important than the tools or methods used by project team members. Their work describes five levels of communications involved in developing software. For our purposes, we are concerned only with their findings relating to the individual, team, and project levels (for our research we are not concerned with the company and external levels described by Curtis et al.).

Curtis et al found that most individuals indicated being frustrated with documentation and its weakness as a communication medium. They also found little evidence to support the claim that documentation reduces the amount of communication required among project team members. At the individual communication level, Curtis et al describe two main documentation vulnerabilities.

· First, there is a trade off between using verbal communication (useful for current development) and written documents for future project members.

· Second, many good software engineering communication practices were found to be impractical in a large software project environment. Curtis et al. did not explicitly list which practices were found to be impractical, but their work alluded to the idea that as a project team grows so do its communication needs; something which current communication processes cannot properly coordinate.

At the team level, Curtis et al found documentation to be inadequate at providing information to resolve misunderstandings concerning requirements or design.

Curtis et al concluded that large projects do require extensive communication that was not reduced by documentation.

Although Curtis et al focussed primarily on the communications needs of large software projects, we believe their work with regard to the effectiveness of documentation is important to projects of all sizes: size only magnifies the problems. Our work provides statistical data that agrees with some of the issues Curtis et al identified about software documentation. We will also offer insight that may improve the ability for documentation to communicate.

Michael Slinn [49], a technical business strategist, contacted several documentation managers (including ones at IBM, Rational Systems and Sun Microsystems) asking the cost of software documentation to an organization. One manager reported, “we just assume that documentation is important, and try to do the best we can with the resources that are made available to us. We have never considered measuring the impact of the quality of work that we do on the success of the product, or the product’s profitability” [49]. As opposed to merely invoking a documentation process based on assumptions, Scott Ambler [1] suggests that all documentation fulfills a purpose. If you do not have a specific purpose for a particular document (or for its maintenance, beyond just being out-dated), then you should either reconsider the need for that artefact or determine its purpose before investing resources in its production (or maintenance) [1].

Jazzar, Abdulaziz and Walt Scacchi [28] conducted an empirical investigation concerning the requirements for information system (IS) documentation. Jazzar and Scacchi's work resulted in eight hypotheses that attempt to model the requirements for achieving effective and quality documentation products and processes, stating:

“The problem [concerning IS documentation], therefore, has been generally formulated in terms of the difficulties in developing and maintaining documents as products with a set of desirable characteristics, but without regard to the processes and organizational settings in which they are produced and consumed.”

Here we note that Jazzar and Scacchi’s findings indicate that a fundamental issue regarding the problems associated with software documentation have been omitted when considering process improvements. Others view the problem of creating and maintaining documentation without properly understanding the environment in which these documents are created, used and are useful to the project team. Jazzar and Scacchi’s comments are in-line with our own. We believe that greater emphasis should be placed on the environment where documents exist to successfully cope with the issues revolving their use and effectiveness. These environmental factors may include how often a document is accessed and under what circumstances, how documents relate to one-another (provided this information is not inherent to the document itself) or how often documentation is maintained. Having a better understanding of how and under what circumstances documentation is produced and consumed will help us to better understand what to offer the audience and how to maintain their attention.

Jazzar and Scacchi address the drawbacks of several proposed

solutions that promote documentation traceability, standardization,

consistency, conversion into hypertext and ease of document development and

online browsing. According to their

findings (and despite standards in documentation) documentation continues to be

unavailable, inconsistent, untraceable, difficult to update and infrequently

produced in a timely or cost-efficient manner [28]. Jazzar

and Scacchi’s findings confirm the important existence of an implicit knowledge base

apart from the documented one. This undocumented knowledge base is captured in

the minds of the software professionals. Jazzar and Scacchi report that

when maintainers left a project, the documentation, which was once thought to

be sufficient, was no longer able to address the information needs of remaining

team members [28].

One might argue that all aspects of the system should be completely documented as insurance against losing the implicit knowledge base of the development team. Unfortunately, as discussed by Bill Curtis, Herb Krasner, and Neil Iscoe [17], this approach is unfeasible because of the impracticality of knowing all of the future documentation needs of project; much like the difficulty in knowing all future requirements of a software system. Curtis et al also believe such an effort will be unable to effectively communicate these future needs to maintainers. Berglund [9] and Glass [25] raise the issue about content overload, whereby having too much information reduces the overall effective of the information due to sheer size. Johannes Sametinger [44] notes that documentation is important, especially for software reuse, but traditional documentation techniques are unable to meet the needs of software professionals. Similarly, Scott Tilley [52] comments on the deficiencies of traditional software documentation techniques. Cockburn also notes that it is extremely inefficient to break down communication into units so that anyone can understand them [15] (page 19). To summarize, Cockburn offers that,

“The mystery [about

communication] is that we can’t get perfect communication. The answer to the mystery [about

communication] is that we don’t need perfect communication. We just need to get close enough, often enough.”

[15] (page 19)

We agree with Cockburn’s statements that the role of documentation in a software project is to offer appropriate communication to the reader, often enough.

Jazzar and Scacchi’s eight hypotheses discussed above offer the following insight. Backbone systems require a more formal documentation process than non-backbone projects (H1) and require high traceability (H2). The same is true for out-of-house development compared to in-house development (H3 and H4). In-house project documentation can accommodate low consistency and completeness, but requires accurate details (H5) and less documentation (H6). Users unfamiliar with similar systems follow a different documentation process than familiar users (H7) and the unfamiliar users require documents that are more consistent, complete and traceable (H8).

H7 and H8 are fairly intuitive and are best explained by Cockburn’s description of the learning process [15]; that is people follow three distinct stages of behaviour when learning; following, detaching, and fluent. Individuals begin as followers and require explicit details and instructions regarding their work. Next, these individuals detach from the prescribed process and seek alternatives when the process breaks down. Eventually, the individual becomes fluent in the skill and it is almost irrelevant whether a prescribed technique is followed or not. The fluent individual applies what is appropriate (knowing what is appropriate is part of becoming fluent) [15].

H1 to H6 recommend general documentation guidelines for software projects in terms of the documentation size (i.e the number and amount of documentation to produce), the level of traceability of the documents as well as the level of formalness in their structure and content. Our work complements Jazzar and Scacchi’s as we focused our initial work on the attributes of quality documents, whereas Jazzar and Scacchi’s focus was primarily on the process of quality documentation.

2.1.4 Comparisons to Other Engineering Disciplines

Often the perception of a continuous and consistent need for complete and up-to-date documentation results from comparing software engineering practices to those of other engineering domains such as civil, mechanical or electrical engineering. The argument that software documentation should always be consistent, complete and up-to-date purely because that is the practice in other engineering domains may not always be valid or appropriate in software engineering.

As Sandro Morasca points out in [39], Software Engineering is young discipline, “so its theories, methods, models, and techniques still need to be fully developed and assessed” [39] including those regarding the notion of proper software documentation. Software Engineering is quite a human-intensive domain where “some aspects of software measurement are more similar to measurement in the social sciences than measurement in the hard sciences” [39]. As a result, the need for hard documentation may be less important than having social documentation (i.e. artefacts that can communicate at a social level as opposed to merely a technical one).

Other engineering domains produce specifications from which a product is derived and usually mass-produced (either in numbers such as computer micro-chip or in size such as a office building or bridge). These specifications need to be precise; otherwise the final product(s) will not fulfil the intended purpose. Comparing this analogy to software engineering, a system’s source code and build mechanism outline the specifications of the product and the compiler helps derive the mass produced product (i.e. the byte code). Software documents from an engineering standpoint are indeed complete and up-to-date in a similar fashion compared to other engineering domains. As software becomes more complex, an additional layer of more human-digestible information is required. The purpose of such a layer, as we have described in this section, is that of communication; which we argue does not imply being completely accurate or always up-to-date.

2.1.5 Perceptions of Being Out-Of-Date

Andrew Hunt and David Thomas [26], as well as several others (for instance [25], [41], and [45]), see little benefit in old documentation. In fact, Hunt and Thomas state that untrustworthy documentation poses a greater threat than no documentation at all.

Miheko Ouchi [41] shares a similar view with Hunt and Thomas. Ouchi associates being up-to-date with being correct, and conversely not-so-up-to-date with being incorrect. Ouchi then infers that incorrect content is unreliable, and being unreliable hinders the document’s credibility, which ultimately reduces its effectiveness. The belief in high correlation between accuracy of content and usefulness is also shared by Glass [25], and Scheff and Georgon [45]. As well, similar thoughts about the importance of maintenance are outlined by Bill Thomas et al [51]. The underlying assumptions from these individuals appear to be that documentation is useful only to extent to which it is a correct and accurate representation of the system. Although being correct (and hence up-to-date) are important factors contributing to relevant documentation, they are not the only factors.

Alistair Cockburn [15], as well as Scott Ambler [1] present an alternate view concerning the role of documentation. They argue that the purpose of documentation is to convey knowledge – something that can be different from merely providing information.

Alistair argues that source code presents the facts of a system and the supporting documents facilitate higher-level interpretation of those facts. A document that instils knowledge in its audience can then be deemed effective, somewhat regardless of its age and the extent to which it is up-to-date [15].

Ambler recommends to “update [software documentation] only when it hurts” [1]. Ambler ads,

“Many times a document can be out of date and it doesn’t matter very much. For example, at the time of this writing I am working with the early release of the JDK v1.3.1 yet I regularly use reference manuals for JDK 1.2.x – it’s not a perfect situation but I get by without too much grief because the manuals I’m using are still good enough.” [1]

To argue against constant and continual documentation maintenance, at the expense of other tasks (such as designing, constructing, testing and delivering software), Ambler further adds,

“It can be frustrating having models and documents that aren’t completely accurate or that aren’t perfectly consistent with one another. This frustration is offset by the productivity increase inherent in traveling light, in not needing to continually keep documentation and models up to date and consistent with one another.” [1]

Part of our work is geared towards uncovering factors beyond completeness and accuracy that contribute to a document’s relevance. Similarly, we will investigate the merits of Alistair and Ambler’s assertions that documentation need not be completely up-to-date or accurate to be useful.

2.2 Software Engineering Principles and Documentation

In the following sub-sections we will collect ideas from existing knowledge about software engineering practices in general and relate that knowledge to the application of sound documentation processes.

Andrew Hunt and David Thomas [26] present several views that define a pragmatic programmer as

one who is agile (in the sense of being early to adopt and fast to adapt to new technologies and techniques; not necessarily agile in the extreme programming sense), inquisitive, critical thinker, realistic and well-rounded [26].

Hunt and Thomas’ insight into quality software development practices and software process heuristics provides appropriate parallels that are applicable to software documentation.

For instance, Hunt and Thomas suggest avoiding broken windows. A broken window in software documentation is

any artefact of a software system that elicits poor design, incorrect decisions or poor code [26].

The definition above does not imply that software artefacts must be perfect. Rather, it is important to maintain the system in a high state of quality (both internal and external). Attempts to improve areas of poor quality in a system should be a priority. Working in an environment of high quality promotes a continued level of high quality work. To align this statement with software documentation, we do not imply that a document refers to a document that is out-dated. Instead, we believe that documentation breaks when it no longer fulfils its purpose in an efficient manner.

The DRY principle (don’t repeat yourself)

that every piece of knowledge must have a single unambiguous, authoritative representation within a system [26]

is well described in the context of software construction. Techniques such as abstraction, encapsulation and inheritance all attempt to follow and promote the DRY principle. Even many programming languages including Java and .Net promote DRY principle with the idea of write one, run everywhere. Techniques, tools and technology attempt to provide similar mechanisms to facilitate the DRY principle to software documentation. Unfortunately, we believe that duplication will continue to exist in software documentation for several reasons. First, our inability to seamlessly relate knowledge among various level of information abstraction hinders our ability to effectively cross reference knowledge. For example, the translation of requirements to specifications and design, at present, will result in an overlap of information and as one changes, it is likely the other related documents will require changes as well. Second, the current impracticality of automating all processes that require duplicate knowledge suggest that duplication will be tolerated under circumstances where the effort to eliminate duplication is far greater than the effort to manage that duplication. For example, summarizing information from various sources for a presentation by cut and paste is more appropriate than parsing the information to automatically generate the report based on the existing collection of documents; especially if the presentation will only be used on one occasion. Third and related to the second issue, current tools and technologies do not always allow individuals to effectively follow the DRY principles. For example, sharing information between file formats (such as .html, .doc and .txt) is usually best achieved by duplication.

Although striving to achieve the DRY principle is important, it is equally important to accept that duplication will occur. Rather than trying to avoid duplication altogether, a more realistic goal should be to manage and minimize your project’s duplication. To help follow the DRY principle, Hunt and Thomas suggest that all secondary sources of knowledge result from a derivation of the primary source [26]. Although not practical for all situations, they suggest creating small tools and parsers to manage these secondary sources. Some practical examples available to help reduce duplication include tools such as Ant to distribute your software, JavaDoc to publish the software's API and JUnitReport to publish the software's testing results. JUnitDoc is another interesting tool that builds JavaDoc API documentation, but it also integrates associated test cases based on JUnit into the API documentation.

Learning to identify and manage sources of duplication will help us to remove them, or at least to minimize their adverse effects on the software project as changes occur.

2.2.3 Good enough means more than just enough

Andrew Hunt and David Thomas [26] describe the concept of good-enough software. We have adapted their definition to align with the principles of good enough in regard to software documentation. Good enough documentation does not imply low or poor quality. Rather, it is an engineered solution that attempts to find a balance between forces that are in-favour and opposed to documentation. Such forces include stakeholders, future maintainers, technical limitations, budget and timing constraints, managerial and corporate guidelines as well as personal state of mind.

The notion of good-enough; or perhaps more suitably, appropriate, should be applied to the context of software documentation. For instance, and as cited in Section 2.1.4, many individuals believe that documentation is practically useless unless it is a well maintained and provides a consistent and accurate view of the system it documents. Context free, it seems obvious that up-to-date documents are better and more useful. However, when forces such as budget and timing (including the time to actually implement the software) are incorporated into the decision making process of a software project, several trade-offs are necessary. One side effect may be the extent of documentation and the extent to which it is maintained. To re-iterate, using an engineered approach to software projects, the most appropriate documentation may be documentation of perceived lower quality. Scott Ambler also concedes that documentation need only be good enough to be effective [1].

A similar point by Hunt and Thomas is to understand when to stop; to understand when a system, or in our situation documentation, is good-enough.

Another relevant observation to consider is that as a software project evolves, so too do the needs of documentation. Scott Ambler describes the issues concerning the changing needs of documentation. In particular, Ambler says that

“during development you’re exploring both the problem and solution spaces, trying to understand what you need to build and how things work together. Post-development you want to understand what was built, why it was built that way, and how to operate it” [1].

Therefore maintaining development documentation may be counterproductive to the needs of the software team once the software reaches post-production.

Our work hopes to substantiate Ambler’s claim that documentation needs change throughout a project’s lifecycle. We hope to use this information to further support our claim that quality software documentation need not always be as consistent and accurate to be effective.

Cockburn warns that due to the limited resources of a software project, additional efforts to improve intermediate work products, such as documentation, beyond its purpose are wasteful. Knowing the point of diminishing returns when creating these intermediate artefacts allows individuals to better reach the point of sufficient-to-purpose. [15]

The evolution of the needs of software team members further supports the multi-dimensional considerations required when considering documentation maintenance. Alone, updated documentation is far more useful than outdated documentation; but when considering the environment in which the documentation must exist, the importance of being up-to-date is not as well understood, and perhaps is not as important a factor in software quality, or even documentation quality, as one may believe.

2.2.4 Applying Reverse Engineering Concepts to Documentation

Tools that can extract information directly from source code could be a very useful artefact for a software system.

Scheff and Georgon [45] describe the requirements for effective documentation automation. In particular, automation demands:

· Completely defined inputs

· Responses to predetermined items

· Unambiguous text

· Standard use of language and format

· Mandated output for contractual requirements

Unfortunately, their description is quite vague, and somewhat ambiguous. It is difficult to truly understand their intentions with regard to the requirements for effective software documentation.

Bill Thomas, Dennis Smith and Scott Tilley [51] provide a clearer picture of the objectives of automation in software documentation. They describe reverse engineering in the context of documentation as one way to produce, and more importantly maintain, accurate documentation.

Thomas et al [51] describe the role and objectives of reverse engineering tools as follows:

"These [reverse engineering] tools create ancillary documentation, provide graphical views of the software system, and generally attempt to augment the knowledge hidden in the source code with secondary structures."[51]

However, Thomas et al believe such automated tools are underused. These tools could be helpful, but unfortunately little is known about what types of documentation are useful and to whom and under what circumstances. "This [observation outlined above] highlights the underlying problem with current documentation creation processes; if no one understands what is needed, it should come as no surprise that tools that produce this type of documentation are rarely used by real-world software engineers"[51].

Janice Signer et al.’s study of software professionals ([47] and [48]) provides some insight into the professional needs of software engineers. Unfortunately, Singer et al.’s work reported mixed results regarding the use of documentation. The results of their questionnaire revealed that the most cited activity of the participants was reading documentation. Yet, their follow-up studies observing software professionals as they work, Singer et al. found documentation was consulted on a much less frequent basis. “Clearly, the act of looking at the documentation is more salient in the SEs’ minds (as evidenced by the questionnaire data) than its actual occurrence would warrant.” [47].

Our work is focussed at better understanding the role documentation in a software project and how it is created and manipulated in a software environment. From this improved understanding, we hope to improve processes of documentation maintenance and inspire new and / or improved technologies to better automate documentation.

2.3 Software Documentation Metrics

Having the ability to rate the effectiveness of a software document using formulated techniques having quantifiable metrics is a useful tool for knowledge management because it allows us to rank documentation based on measurable attributes. Although measuring relevance is difficult due to the subjectivity of the definition. We hope that our efforts will improve our ability to predict the relevance of a document based on heuristics about relevance, both within the context of documentation as well as taken from similar domains in computer science.

2.3.1 Limitations of Readability Formulas

To date, one of the most publicized means of rating the content of documentation is the application of readability functions. These functions consider metrics such as sentence structure and length, as well as grammatical writing style, to place a grade level on a piece of writing.

In a technical environment many individuals disagree about the usefulness of such measures at predicting the relevance of document. In particular, George Klare revisited his 1963 his book 'The Measurement of Readability” in Readable Computer Documentation [32]. Klare states that he is sceptical about applying readability formulas to computer documentation. Klare believes that readability formulas do not perform well for lists, tables, structured text and therefore are unsuitable to most writing found in software documentation. Klare also notes the relationship between readability and comprehension; believing that improving readability increases reading speed and acceptability but comprehension of the document is not guaranteed. Klare notes that a major problem is that there are few usability studies on the quality of documentation [32].

Janice Redish [43] expanded on Klare revised work. Redish emphasizes that readability formulas have even more limitations than Klare suggested. Redish points out that readability formulas were developed for children’s school books, not technical documentation. She also notes that most readability formulas ignore the effects of content, layout, and retrieval aids (indexing for example) on text usefulness.

Redish advocates usability testing over readability formulas, even if only a few individuals are involved in the testing. Redish states that readability formulas predict only the level of reading ability required to understand a document and provide no insight in the causes or potential solutions to the problems found in a document. On the other hand, Redish says that usability studies not only demonstrate the extent to which actual people are able to understand a document, but also are able to identify where certain flaws in the document exist.

Although readability studies may offer some insight in the usefulness of documentation, we concur with Redish’s observations and beyond our acknowledgement that readabilities have been used to gauge the quality of a document: we will not incorporate these formulas in our work.

2.3.2 Applying Buffer Management Techniques to Documentation Relevance

This section highlights past work on buffer replacement and parallels their findings to several documentation problems.

We start by introducing the work of Björn Jónsson, Michael J. Franklin and Divesh Srivastava [29]. These individuals introduced buffer replacement techniques to improve information retrieval in 1998. They focussed on exploiting the well established buffer replacement techniques from the database community in the realm of information retrieval. Jónsson et al’s work involved information retrieval at a query level and sought to efficiency avoid excessive processing on multiply-refined searches based on access patterns to similar queries. Our work is seeking to apply these buffer replacement algorithms at an even more abstract level; that is, ranking the relevance of a document against the environment in which it exists based on activity within that environment.

To select a buffer replacement technique suitable to documentation, we look to Lee et al’s [34] work on techniques that combine the recency and frequency of access patterns of a particular item in a set. They show that combining the elements of recency and frequency of use greatly improves buffer management. To demonstrate the parallel of cache block replacement policies to software documentation relevance, we have extended Lee et al definition of block replacement policies [34] to apply, more generally, to knowledge management as:

the study of the characteristics or behaviour of workloads to a system. In particular, it is the study of access patterns to knowledge within a knowledge base and is based on recognition of access patterns through acquisition and analysis of past behaviour and history.

Lee et al [33] introduced the Least Recently / Frequently Used (LRFU),

a buffer management policy that subsumes both the Least Recently Used (LRU) and Least Frequently Used (LFU) policies,

approach to buffer management. We will incorporate this metric to assist in our calculation of documentation relevance. We have chosen LRFU due to its ability to combine the benefits of both popular techniques, Least Recently Used (LRU) and Least Frequently Used (LFU).

The LRU approach

is a buffer management policy that ranks blocks by the recency with which they have been accessed.

LRU bases its decisions on how recently documents have been

accessed. Although highly adaptable, it

is criticized for being short-sighted; that is, it only considered recent

history when gauging the relevance of a block. We also considered, but later

rejected, an improved LRU algorithm (known as LRU-K described by O’Neil in [40]) because of the somewhat arbitrary nature of the ![]() parameter – which is

used to improve LRU’s tendency to be short-sighted.

parameter – which is

used to improve LRU’s tendency to be short-sighted.

The LFU approach

is a buffer management policy that ranks blocks by the frequency with which they are accessed.

LFU is based on the frequency with which documents have been accessed. Although it is often used, LFU results can be deceiving due to the issues of locality where a document is accessed frequently in a small time frame, but overall is rarely referenced [34].

The metric used to rank documents in LRFU is called the

Combined Recency and Frequency (CRF) value.

A document with a high CRF values is more likely to be referenced again

in the future based on the LRFU policy.

To incorporate the recency of a document into the CRF value, a weighting

function ![]() is used, where

is used, where ![]() is the time span

between now,

is the time span

between now, ![]() , and the time,

, and the time, ![]() , of the

, of the ![]() access to a document,

access to a document,

![]() . The frequency of a

document’s use is incorporated into the CRF value by adding the sequence of

recency weighting function. The CRF

equation is described below in Equation:

2‑1.

. The frequency of a

document’s use is incorporated into the CRF value by adding the sequence of

recency weighting function. The CRF

equation is described below in Equation:

2‑1.

|

|

Based on the above definition, Lee et al state that

“In general, computing the CRF value of a block requires that the reference times of all the past references to that block be maintained. This obviously requires unbounded memory and thus, makes the policy unimplementable.” [34]

As such, Lee et al transform Equation: 2‑1 into a recursive equation based on the ![]() reference to a

document. This equation is shown below

in Equation: 2‑2.

reference to a

document. This equation is shown below

in Equation: 2‑2.

|

|

|

|

|

|

|

|

|

2 |

|

|

|

‑ |

|

|

|

2 |

|

|

|

|

|

Recursive CRF Value |

|

For Equation:

2‑2 to hold, Lee et al [34] state that the weighting factor, ![]() , must maintain the following property:

, must maintain the following property: ![]() or

or ![]() .

Please refer to Lee et al’s work in [34] for more information regarding the

derivation from Equation:

2‑1 to Equation:

2‑2. Our work will use the weighting factor

described in Equation:

2‑3 and used by Lee et al [34] that satisfy the first property above (

.

Please refer to Lee et al’s work in [34] for more information regarding the

derivation from Equation:

2‑1 to Equation:

2‑2. Our work will use the weighting factor

described in Equation:

2‑3 and used by Lee et al [34] that satisfy the first property above (![]() ).

).

|

|

The function above is used to weight the

importance of recency and frequency with regards to predicting

future access to an artefact. Varying ![]() (

(![]() ) and

) and ![]() (

(![]() ) will allow the weighting function to

exercise the full range of possible combinations of importance of

recency and frequency.

“An intuitive meaning of

) will allow the weighting function to

exercise the full range of possible combinations of importance of

recency and frequency.

“An intuitive meaning of ![]() in this

function is that a block’s CRF value is reduced to

in this

function is that a block’s CRF value is reduced to ![]() of the

original value after every

of the

original value after every ![]() time

steps.” [34].

time

steps.” [34].

Potential future work in our field of document

relevance could investigate the relationship between recency /

frequency of a document’s access to the likelihood of being

accessed again in the near future (based on a ranking

scale). This work could

then provide potentially more suitable parameter values for Equation:

2‑3. Because our work is only

beginning to explore the potential application of buffer

replacement strategies to software documentation, we will use the

standard values of ![]() and

and ![]() to give

equal preference to the frequency and recency of a document’s

access.

to give

equal preference to the frequency and recency of a document’s

access.

Finally, to calculate the CRF value a particular moment in time (since not all accesses are made at the time you wish to consider), you apply Equation: 2‑4. This equation is used to relate the CRF value of an item’s last access to its present value.

|

|

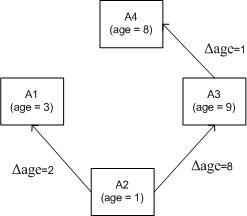

For example, Figure 2‑2 shows the CRF value of a document, ![]() , at time 6 that was accessed at time 1, 3, and

4. To avoid complicating

the process of calculating CRF, we used

, at time 6 that was accessed at time 1, 3, and

4. To avoid complicating

the process of calculating CRF, we used ![]() and

and ![]() for the

example in Figure

2‑2.

for the

example in Figure

2‑2.

Figure 2‑2: An example LRFU calculation

Lee et al [34] have demonstrated that LRFU successfully avoids the limitations of LRU and LFU while exploiting both of their benefits. LRFU is able to reduce the effects of locality without limiting the referenced history. Finally, LRFU algorithm can be adjusted based on the relative importance of recency versus frequency [34]. For the many reasons cited above, our work with software documentation relevance will incorporate the LRFU algorithm.

The use of buffer replacement strategies will help identify the usefulness of a document beyond the content contained within it and will take into account how and when the document is used. This environmental information should improve our ability to locate appropriate information and better prioritize maintenance; much as the application of buffer replacement strategies have accomplished in other similar domains such as information retrieval and database management. Please refer to Chapter 6 for more details about using buffer replacement techniques in predicting documentation relevance.

2.3.3 Authoritative Techniques for Rating Documentation Relevance

Much of the current work regarding ranking and prioritizing information is in regard to exploring the World Wide Web (www). Reflecting on the progress made in this area, and the justifications for certain approaches to predicting the relevance of a document (or page as it is referred to in a www context) should help improve our ability to rank and retrieve information on a smaller scale, such as that present in a software project [36].

Prior to the expansion of the World Wide Web, the work of Botafogo et al. [10] regarding page relevance was focused on stand-alone hypertext environments. Botafogo’s work defined the terms index and reference nodes. An index node is a node whose out degree is significantly higher than the average. A reference node is one whose in-degree is much larger than the average. Botafogo et al considered measures of centrality and authoring pages based on distances between nodes in the hypertext web. Much of their work has been incorporated into improved techniques for using the in and out-degree of a node to predict relevance.

Sergey Brin and Lawrence Page [12] implemented Google, the first search engine that successfully based search results on the link structure of the Web. Brin and Page determined the priority of a page by its page rank,

“an

objective measure of its citation importance that corresponds

well with people's subjective idea of importance.” [12]

As

opposed to averaging merely the in and out-degree of the link

structure, as done by Botafogo et al. [10],

the Google project also weighted each reference; therefore being

associated with relevant pages (for instance pointing to it, or

being pointed by it) increases the weight of that reference and

hence increases the rank of that page.

Brin and

Page also found that prioritizing search results of a text search

based solely on the titles of the pages combined with Page Rank

approach to prioritize the results performed well.

To

define the page rank ![]() of a

page

of a

page![]() , we consider all pages,

, we consider all pages,![]() , that reference

, that reference ![]() where

the total number of references to

where

the total number of references to ![]() is

is![]() . The page rank calculation is shown below in Equation:

2‑5.

. The page rank calculation is shown below in Equation:

2‑5.

|

|

To

maintain the page rank as a probability distribution, a damping

factor, ![]() , usually set to 0.85, is used [12]. The results can be calculated

using an iterative algorithm where the solution corresponds to

the principle eigenvalues of the normalized link matrix. Brin and Page describe page

rank as the probability that a random user will access a

particular page, and the damping factor is the probability that a

user will eventually leave that page.

, usually set to 0.85, is used [12]. The results can be calculated

using an iterative algorithm where the solution corresponds to

the principle eigenvalues of the normalized link matrix. Brin and Page describe page

rank as the probability that a random user will access a

particular page, and the damping factor is the probability that a

user will eventually leave that page.

Jon Kleinberg [33] approaches page relevance from a similar approach to Brin and Page [12], but instead of considering page rank, he considers the authority of a page. Kleinberg investigates the relationship between index and reference nodes (Kleinberg refers to these items respectively as hubs and authorities), building on Botafogo et al’s [10] work to cope with a larger document space.

Jon Kleinberg, like Botafogo et al. [10] (as well as others including [12] and [2]), show that the nature of a hyperlinked environment can be an effective means at discovering authoritative sources. Kleinberg’s study included searching for, and finding relevant results for terms such as ‘Harvard’, ‘censorship’, ‘Java’, ‘Search Engines’, and ‘Gates’. The focus of their work was to improve the process of discovering authoritative pages based on a subset of the available pages (such as the subset returned by text based search).

Kleinberg’s approach complements, as opposed to discounts, text based searching techniques showing that using the results of a text based search (to narrow the result space) as well as the information about the interconnections between documents, almost exclusively, one can produce relevant discoveries of authoritative sources.

In a similar fashion, the page rank algorithm studies little more than the page titles and the anchor titles (the text that describes a particular link). Kleinberg justifies using the in-degree and out-degree of a hyperlinked environment for several reasons. The most prominent justification for using the link structure to determine authority is that using links avoids the problem whereby a prominent page may not be sufficiently self-descriptive. Without considering link structure, these unselfish pages may not rank as highly compared to less authoritative sources (which are more self pronounced). Another justification for using link structures is the fact that text based searches produces a high number of irrelevant results [33].

Kleinberg’s approach requires an initial root set of results that contain some relevant results. This root set was obtained by taking that top 200 results from a popular search engine. The set is then expanded to a base by adding documents that are either referenced by a root set document, or a document that references a document in the root set. Figure 2‑3 illustrates the expansion of a root to a base set.

Figure 2‑3: Expanding the root set into a base set [33]

For practical reasons, Kleinberg allowed each root to contribute at most 50 new documents to the root set.

Kleinberg’s approach is unique in that it attempts to balance relevant sources and popular ones. Kleinberg uses the notion of authority and hub pages. A good authoritative page is referenced by good hub pages; whereas good hubs link to good authoritative pages. Understanding and tracking the quality of hub pages helps to better reflect to the quality of authoritative ones.

This mutually reinforcing relationship between hubs and authorities is represented in Equation: 2‑6 and Equation: 2‑7.

|

|

|

|

|

Where the vector / matrix ![]() is the

authority score for pages

is the

authority score for pages ![]() to

to ![]() and

and ![]() is the

hub score, where

is the

hub score, where ![]() and

and ![]() .

Finally,

.

Finally, ![]() is the

adjacency matrix of the graph outlining the references among

pages 1 to n. Much like

the Page Rank algorithm, an iterative algorithm will result in

the principle eigenvalues

of the normalized authority and hub matrix. Figure 2‑4 illustrates the process of calculating

the authority and hub values a page.

is the

adjacency matrix of the graph outlining the references among

pages 1 to n. Much like

the Page Rank algorithm, an iterative algorithm will result in

the principle eigenvalues

of the normalized authority and hub matrix. Figure 2‑4 illustrates the process of calculating

the authority and hub values a page.

Figure 2‑4: The basic operation of authority and hub value [33]

Kleinberg’s study found that the authority and hub values converge relative quickly after about 20 iterations.

Signer et al., in their study of software professionals [47] and [48], found that searching was the activity most often performed. As observed by Brin and Page [12], a simple text-based search (involving only page titles and link anchors) combined with a priority algorithm based on the link structure can result in relevant results. As such, we deem Kleinberg’s algorithms most appropriate in the context of software documentation. Combining a simple text based search to uncover the root set, Kleinberg’s equations can then expand the possible results to a base set. Using his authoritative technique to rank documentation, the results should be relevant based on the users needs. Kleinberg’s approach is also able to rank documents based on quality of the references they provide (gauged by having a high hub values). Not only can we use Kleinberg’s approach to predict highly relevant pages; we can also use it to predict highly indexed pages (that is, pages that index several relevant pages).

3

Chapter 3:

Survey on Documentation

A survey of software professionals was conducted as part of our research and is referenced throughout this thesis. This chapter highlights our motivation, research methods, participants demographics as well as an analysis of the questions and preliminary results of the survey. Refer to Appendix A to Appendix E for a listing of the survey questions and resulting data.

3.1 Survey Motivation and Objectives

Our motivation to conduct a survey of software professionals was to shed practical insight on the objectives outlined in Chapter 1, Section 1.2.

Tim Lethbridge, Susan Sim, and Janice Singer [37] describe software engineering as

“a labor-intensive activity. Thus to improve software engineering practice, a considerable portion of our research resources ought logically to be dedicated to the study of humans as they create and maintain software.” [37]

In search of answers, we performed a systematic survey to question the thoughts of software practitioners and managers. Our approach is to build theories based on empirical data; possibly uncovering evidence that questions our intuition and common sense about documentation and its role in software engineering.

The research objectives of the survey are to determine what makes software documents useful. As well, we will investigate how a document’s relevance can be measured using not only its own attributes, but also compared against other documents of the software system.

Our survey investigated several factors that may affect the use and usefulness of software documentation including:

· The technology used to create / maintain / verify software documentation.

· The software domain and size.

· The experience of the development team.

· The role of the management and development team with regard to documentation.

· The styles, standards and practices involved in the documentation process.

· The turnover time between software project changes to updates in related documents (i.e. the effectiveness of documentation maintenance).

· The technologies used to automate the documentation process.

By exploring how these elements contribute to a document’s relevance (by aiding or potentially hindering it), we hope to discover results that will help improve the entire documentation process.

Before conducting the main survey described in this paper, we conducted a pilot study to help develop and refine the questions.

The pilot-study participants were sampled from a fourth year software engineering course offered at the University of Ottawa in the winter 2002. Most participants had some experience in the software industry.

The official survey, conducted in April 2002, featured fewer and more concise questions with an improved sampling approach that is described in the following subsection. All participants had at least one year of experience in the software industry; several had over ten years experience.

Individual responses and identifying information have been withheld to protect confidentiality. The University of Ottawa’s Human Subjects Research Ethics Committee approved the conducting of the survey.

The survey was available online at [21] and is outlined in greater detail in appendices Appendix A to Appendix D. Bill Kalsbeek’s work in [31] provided insight in our survey research methods described below. The survey introduction outlined our research goals and objectives. Participants could then log into the system creating a user id and optionally providing a password, or continue anonymously. In creating a user id, individuals could save their responses and return at a later date to complete the survey. Participants were then asked to read and agree to our Informed Consent statement if they wished to continue. Once the consent was complete, the participants were able to answer the survey questions.

The data were stored in a database and exported to a spreadsheet application for subsequent analysis.

Participants were solicited in three main ways. The members of the research team approached:

· Management and human resource individuals of several high-tech companies. They were asked to approach employees and colleagues to participate.

· Peers in the software industry.

· Members of software e-mail lists. They were sent a generic invitation to participate in the survey.

Most participants completed the survey using the Internet [21]. A few replied directly via email. There were a total of 48 participants who provided responses that were complete and contained valid data.

The participants were categorized in several ways based on software process, employment duties and development process as outlined below.

We divided the participants into two groups based on the individual’s software process as follows:

· Agile. Twenty-five individuals that somewhat (4) to strongly (5) agree that they practice (or are trying to practice) agile software development techniques, according to Question 29 of the survey.

· Conventional. Seventeen individuals that somewhat (2) to strongly (1) disagree that they practice agile techniques, or indicated that they did not know about the techniques by marking ‘n/a’ for not applicable.

Our rationale for the above division is that the proponents of agile techniques promote somewhat different documentation practices from those recommended in conventional software engineering methodologies. The dominant characteristics of an agile participant include test-first development strategies, pair programming, little up-front design and strong communication bonds with other developers, managers and customers. There is somewhat of an unfounded stigma that agility assumes documentation is not useful and should not be a component of a software project. Some have suggested that the participants identifying themselves as agile are merely those individuals that avoid documenting their work. The suggestion that the agile participants are merely lazy in their work-habits towards documentation is unlikely. The primary message of agility is to do what is most suitable assuring that an appropriate safety-net (i.e. test-first strategies) is available to support change. The decision to allocate fewer resources and less effort to documentation is not one of lack of effort, but rather one of cost-benefit between developing software and describing it [1].

In addition, we divided the participants based on current employment duties as follows:

· Manager. Twelve individuals that selected manager as one of their current job functions, according to Question 44.

· Developers. Seventeen individuals that are non-managers and selected either senior or junior developer as one of their current job functions, according to Question 44.

Finally, we divided the participants based on management’s recommended development process as follows:

· Waterfall. Thirteen individuals that selected waterfall as the recommended development process, according to Question 46 of the survey.

· Iterative. Fourteen individuals that are non-waterfall participants and who selected either iterative or incremental as the recommended development process, according to Question 46 of the survey.

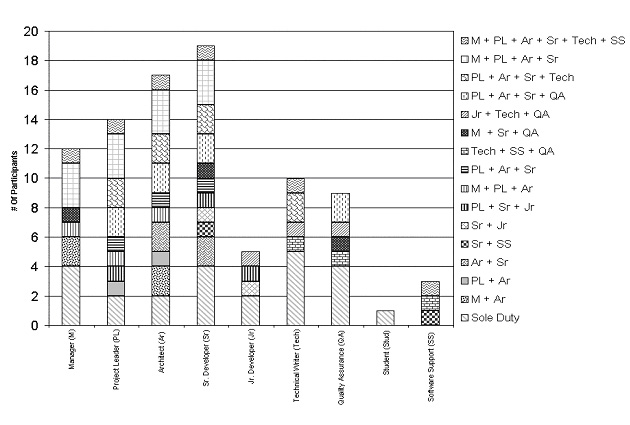

Figure 3‑1 outlines the number of participants in each category outlined above. The number of participants not included in a particular category is also highlighted. For instance, project leaders, software architects and technical writers comprise the 19 individuals not considered in the Manager / Developer categorization.

Figure 3‑1: Survey participants’ arranged by category

The following observations were made with respect to the category numbers shown above.

· There were slightly more agile participants than conventional participants. The individuals identified as agile agreed that they practice or they are trying to practice agile techniques. These individuals were not necessarily involved in strictly agile projects, but rather applying agile techniques in their personal software habits. Please refer to Figure 3‑5 for more details about the software processes of the participants.

· Nearly half of the participants were not considered as developers or managers. It is important to note that software architects, project leaders, quality assurance individuals and technical writers comprise the individuals not included in the Manager / Developer group. Please refer to Figure 3‑4 for more details about the participant’s employment duties.

· Several individuals are practicing software processes other than the conventional waterfall and incremental / iterative approach. Please refer to Figure 3‑5 for more details about the other software processes of the participants.

This section will describe the participants’ demographics. The divisions separate individuals based on software experience, current project size and software duties. The purpose of this section is to show that the survey was broadly based, and therefore more likely to be valid in a wide variety of contexts.

Figure 3‑2 illustrates the participant’s experience in the software field (based on number of years in the industry). Please note that one participant did not answer this question and is not considered in the percentage calculations.

Figure 3‑2: Participants' software experience in years

Table 3‑1 highlights the participants’ current project size. The size is estimated in thousands of lines of source code (KLOCs). Please note that two participants did not answer this question and are not considered in the percentage calculations.

Table 3‑1: Project sizes in thousands of lines of code (KLOC)

|

|

Project Size (KLOCs) |

Number of Participants |

Percentage |

|

|

|

< 1 |

0 |

0 % |

|

|

|

1 to 5 |

1 |

2 % |

|

|

|

5 to 20 |

13 |

28 % |

|

|

|

20 to 50 |

6 |

13 % |

|

|

|

50 to 100 |

5 |